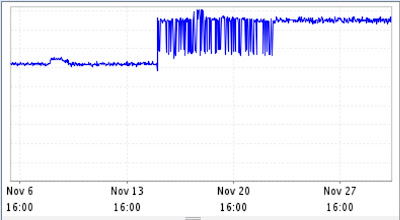

The topic of software performance and efficiency has been making the rounds this month, especially around engineers not being able to influence their leadership to invest in performance. For many engineers, performance work is some of the most fun and satisfying engineering work there is. If you are like me, you love seeing some metric or graph show a step-function improvement - my last code commit this year was one such effort, and it felt great seeing the results.

However, judging by the replies on John Carmack's post, many feel they are unable to influence their leadership to give such projects appropriate priority. Upwards influence is a complex subject, and cultural norms at individual companies will have a big impact on how (or if) engineers get to influence their roadmaps. That said, one tool that has been useful for me in prioritizing performance work is tying it to specific business outcomes. Performance and efficiency work can impact the business in multiple distinct ways, and I think it's worth being explicit about those.

Performance is a feature

We frequently see this in infrastructure services like virtualized compute, storage, and networking. Databases and various storage and network appliances are another example. Performance of these products is frequently one of the dimensions customers evaluate, and that tends to shape product roadmaps. This is also where we see benchmarks that fuel a performance arms race, and never-ending debates about how representative these benchmarks are of the true customer experience. In my experience, teams working in these domains have the least trouble prioritizing performance-related work.

Better performance drives down costs

We tend to see this in distributed systems a lot. By improving the performance of the code path that handles a distributed system's main unit of work, we can increase the throughput of any single host. That in turn decreases the count (or size) of hosts needed to handle the aggregate work that the distributed system faces, driving down costs. One obvious caveat is that many distributed systems have a minimum footprint that is needed to meet their redundancy and availability goals. A lightly loaded distributed system that operates within that minimum footprint is unlikely to see any cost savings from performance optimizations, at least not without additional and often non-trivial investments that would allow the service to "scale to zero."
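To make the minimum-footprint caveat concrete, here is a rough back-of-the-envelope sketch. The function name, the load numbers, and the six-host redundancy floor are all made up for illustration, and real capacity planning would also account for headroom and uneven load:

```python
import math

def hosts_needed(peak_load_rps, per_host_rps, min_hosts):
    """Hosts required to serve peak load, never below the redundancy floor."""
    if per_host_rps <= 0:
        raise ValueError("per_host_rps must be positive")
    return max(min_hosts, math.ceil(peak_load_rps / per_host_rps))

# Heavily loaded fleet: a 25% per-host throughput gain shrinks the fleet.
busy_before = hosts_needed(peak_load_rps=100_000, per_host_rps=1_000, min_hosts=6)
busy_after = hosts_needed(peak_load_rps=100_000, per_host_rps=1_250, min_hosts=6)

# Lightly loaded fleet: already at the minimum footprint, so nothing changes.
idle_before = hosts_needed(peak_load_rps=2_000, per_host_rps=1_000, min_hosts=6)
idle_after = hosts_needed(peak_load_rps=2_000, per_host_rps=1_250, min_hosts=6)

print(busy_before, busy_after)  # 100 80
print(idle_before, idle_after)  # 6 6
```

The same optimization saves twenty hosts in the first case and none in the second, which is why it helps to know which side of the floor a service sits on before pitching cost savings.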

Better performance improves customer experience

This category of performance projects improves customer experience, typically by improving the latency or quality of some process. Perhaps the best-known example is website load times, but any other process where humans (or machines!) wait for something to happen is a good candidate. Another example, from my youth, is video games - the same hardware would run some games much smoother and at higher resolution than others. This was typically the case for any game where John Carmack had a hand!

The biggest challenge in advocating for this category of performance improvement projects is quantifying the impact. Over the past decades, multiple studies have demonstrated a strong correlation between fast page load times and positive business outcomes. However, gaining that conviction required a number of time-consuming and effort-intensive experiments, an investment that may be impractical for most teams. And even then, at some point the returns likely become diminishing. Will a video game sell more copies if it runs at 90 fps rather than 60?

Besides time-consuming A/B studies, one of the best ways to advocate for these kinds of performance improvement projects, in my experience, is to deeply understand how customers use your product. Then you can bring concrete customer anecdotes to make the case.

Better performance reduces availability risks

These types of performance projects tend to come up anytime we have performance modes in our system, that is, behavior where some units of work are less performant than others. An example of that could be an O(N²) algorithm that sometimes faces large Ns. Such inefficiencies create a situation where a shift in the composition of the various modes can tip the system over the edge, creating a DoS vector. Throttling and load shedding the more expensive units of work is one common approach. However, improving their performance to be on par with the rest can be even more effective.
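As a toy illustration of how a shift in composition changes the load, the sketch below compares the average work per request for a quadratic and a linear code path. The request sizes and traffic fractions are invented for the example, and "work" is just an abstract count of operations:

```python
def mean_cost(mix, cost):
    """Expected work per request, given {request size N: fraction of traffic}."""
    return sum(fraction * cost(n) for n, fraction in mix.items())

def quadratic(n):
    return n * n

def linear(n):
    return n

# Hypothetical traffic mixes: small requests (N=10) and large ones (N=100).
normal_mix = {10: 0.99, 100: 0.01}
shifted_mix = {10: 0.95, 100: 0.05}

quadratic_growth = mean_cost(shifted_mix, quadratic) / mean_cost(normal_mix, quadratic)
linear_growth = mean_cost(shifted_mix, linear) / mean_cost(normal_mix, linear)

print(f"O(N^2) path: {quadratic_growth:.2f}x more work per request")
print(f"O(N) path:   {linear_growth:.2f}x more work per request")
```

With these made-up numbers, moving large requests from 1% to 5% of traffic roughly triples the work on the quadratic path while the linear path grows by about a third. The request rate is the same in both cases, yet only one of them is likely to blow through the fleet's headroom.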

One way to identify and drive down these kinds of performance modality issues is by plotting tail and median latencies on the same graph, or perhaps even using fancier graph types like histogram heatmaps. Seeing multiple distinct modes in the graph is a telltale sign that your system has such an intrinsic risk.
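If you do not have a heatmap handy, a crude version of the same check can be done on raw latency samples. The sketch below is only that, a sketch: the sample data is synthetic, the helper names are mine, and "modes" are approximated as runs of adjacent non-empty power-of-two buckets, which is a simplification of what a proper histogram heatmap would show:

```python
import math
import statistics
from collections import Counter

def latency_summary(samples_ms):
    """Median and p99 from the 99 percentile cut points of the samples."""
    cuts = statistics.quantiles(samples_ms, n=100)
    return {"p50": cuts[49], "p99": cuts[98]}

def log2_histogram(samples_ms):
    """Count samples per power-of-two bucket; bucket k holds [2**k, 2**(k+1)) ms."""
    return Counter(math.floor(math.log2(s)) for s in samples_ms)

def count_modes(hist):
    """Count runs of adjacent non-empty buckets; more than one hints at distinct modes."""
    buckets = sorted(hist)
    return sum(1 for i, b in enumerate(buckets) if i == 0 or b - buckets[i - 1] > 1)

# Synthetic data: 95% of requests take 8-12 ms, 5% take 400-449 ms.
fast = [8 + (i % 5) for i in range(950)]
slow = [400 + (i % 50) for i in range(50)]
samples = fast + slow

summary = latency_summary(samples)
modes = count_modes(log2_histogram(samples))
print(summary, "modes:", modes)
```

Here the median sits in the fast cluster while p99 lands in the slow one, and the histogram shows two separate clusters with nothing in between. A large gap between p50 and p99 on its own can also come from a long smooth tail, so the bucket view is what suggests distinct modes rather than just noise.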

Performance has security impact

Security-sensitive domains are unique because, more important than raw performance, is the lack of performance variability. This is because any variability can expose potential side channels to an attacker. For example, if an encryption API takes different lengths of time depending on the content being encrypted, then a determined adversary can use that timing information as a side channel to infer information about the plaintext that is being encrypted. Luckily, very few teams are writing code with that kind of blast radius. The amount of expertise and consideration that goes into getting such code right is truly mind-boggling!
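A simpler cousin of the encryption example is comparing a secret token against user input. The sketch below is illustrative only: `leaky_equal` is a hypothetical helper that returns how many bytes it examined, standing in for elapsed time so the leak is visible without noisy measurements. Python's standard library offers `hmac.compare_digest` for this kind of comparison; it is designed so that its running time does not reveal where the inputs differ.

```python
import hmac

def leaky_equal(a, b):
    """Early-exit comparison. Returns (equal, bytes_examined) to expose the leak."""
    if len(a) != len(b):
        return False, 0
    for i, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return False, i + 1  # stops at the first mismatch
    return True, len(a)

secret = b"s3cr3t-token"

# The amount of work depends on how much of the guess is correct.
_, steps_wrong_first_byte = leaky_equal(secret, b"X3cr3t-token")
_, steps_wrong_last_byte = leaky_equal(secret, b"s3cr3t-tokeX")
print(steps_wrong_first_byte, steps_wrong_last_byte)  # 1 12

# Preferred: a comparison built to avoid content-dependent timing.
matches = hmac.compare_digest(secret, b"s3cr3t-token")
rejects = hmac.compare_digest(secret, b"s3cr3t-tokeX")
```

An attacker who can measure the early-exit version precisely enough can guess the secret one byte at a time. Real cryptographic code has to worry about far subtler sources of variability than this, such as caches and branch prediction, which is part of why it is best left to well-reviewed libraries.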

Conclusion

Like all classifications, this one is imperfect. For starters, a project can fall into multiple categories. For example, improving P50 request latency on a web service is likely to both improve customer experience and reduce service costs. But like they say, "All models are wrong, some are useful", and hopefully this model will be useful in helping folks tie performance work to desirable business outcomes.